Last updated on July 13th, 2024 at 11:09 am

We’ll discuss recurrence relations, recurrence, methods for solving recurrence relations – substitution method, recursion tree method, and master method, and its examples.

Table of Contents

1. Recurrence Relations

Recurrence relations are recursive definitions of mathematical functions or sequences.

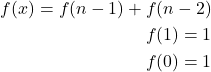

For example, the recurrence relation defines the famous Fibonacci sequence 1, 1, 2, 3, 5, 8, 13, …:

(1)

Given a function defined by a recurrence relation, we want to find a `closed form’ of the function. In other words, we would like to eliminate recursion from the function definition.

There are 3 methods to solve recurrence relations:

- Substitution method

- Recursion-tree Method

- Master Theorem

1.1 Recurrences

When an algorithm contains a recursive call to itself, its running time can often be described by a recurrence.

A recurrence is an equation that describes a function in terms of its value on smaller inputs.

For example, the worst-case running time T(n) of the merge-sort can be described as

(2)

The time complexity of the merge-sort algorithm in the worst-case is ![]()

2. Substitution Method

Substitution method consists of guessing an asymptotic (upper or lower) bound on the solution, and trying to prove it by induction.

In other words, using the substitution method, one has to guess the form of the solution.

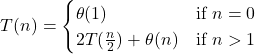

Consider the recurrence relation : ![]()

We guess that the solution is ![]() we have to prove that

we have to prove that

![]()

Assume that this bound holds for ![]()

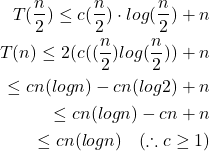

Example : What is the time complexity of the following recurrence relation

(3)

3. Recursion Tree Method

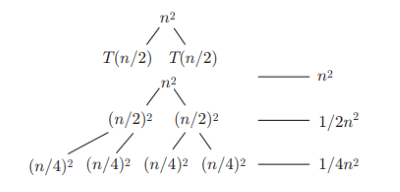

A recursion tree is useful for visualizing what happens when a recurrence is iterated. It represents a tree of recursive calls and the amount of work done at each call is shown with the help of a diagram.

The recursive tree method is good for generating guesses for the substitution method. It can be unreliable and promotes intuition.

In a recursion tree, each node represents the cost of a single sub-problem somewhere in the set of recursive function invocations. We sum the costs within each level of the tree to obtain a set of per-level costs, and then we sum all the per-level costs to determine the total cost of all levels of the recursion.

Recursion trees are useful when the recurrence describes the running time of a divide-and-conquer algorithm.

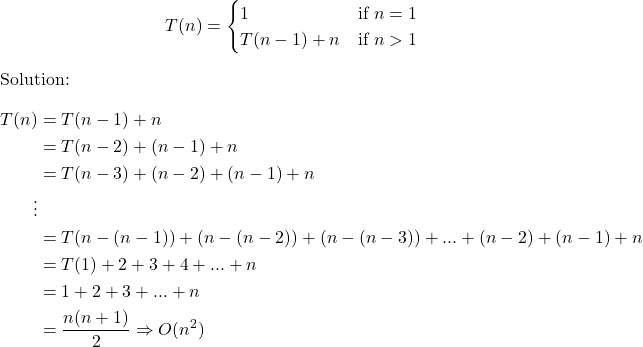

Example: Consider the recurrence below relation: T(n) = 2T(n\2)+n2

How deep does the tree go?

We stop at the leaf, and we know we are at a leaf when we have a problem of size 1:

![]()

The amount of work done is given as

![Rendered by QuickLaTeX.com \[ \sum_{i=0}^{log n}{\frac {n^i}{2}} = \Theta n^2 \]](https://stepscribes.com/wp-content/ql-cache/quicklatex.com-f86b074aea6497a105a7e2162d83f2a0_l3.png)

This is geometrically decreasing in size, so it would not get any bigger than ![]() .

.

4. Master Theorem

Master theorem provides a method to solve various recurrence relations. Although all the relations can’t be solved using this method, it is one of the useful methods of solving complex relations of the form:

![]()

where a and b are constants. f is a function of n. this recursive algorithm breaks up the input into sub-problems of size n/b each.

Three cases of master theorem are as follows:

1. If ![]() for some constant

for some constant ![]() , then

, then ![]()

2. if ![]() then

then ![]()

3. if ![]() for some consant

for some consant ![]() and if

and if ![]() for some constant

for some constant ![]() and all sufficiently large

and all sufficiently large ![]() , then

, then ![]() .

.

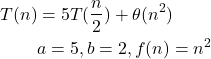

Example: Find the time complexity of the following recurrence relation(using Master Theorem) : T(n) = 5T(n\2)+θ(n2)

Solution:

Compare ![]() to

to ![]() .

.

So, time complexity = ![]() .

.

FAQs

How to solve recurrence relations?

Three methods to solve recurrence relations:

1. Substitution method

2. Recursion-tree Method

3. Master Theorem

How to solve recurrence relations by using the substitution method?

The substitution method for solving recurrences comprises two steps:

1. Guess the form of the solution.

2. Use mathematical inductions to find the constants and show that the solution works.